Professional Experience expand all

- 2020-present Meta FAIR, Research Software Engineer 2020-present

Melo Park, California.

- 2019-2020 University of Edinburgh, Postdoctoral Researcher 2019-2020

Developed industry-grade differential testing for IBM, integrated into their OpenJ9 and Eclipse JVM testing services. Created a new machine learning program representation for analysis and optimization. Funded by IBM and Tetramax.

- 2018 DeepMind, Software Engineer Intern 2018

Exploring deep reinforcement learning for optimizing instruction fusion of TensorFlow XLA program graphs. Designed and implemented a black-box iterative compilation approach for TPUs, GPUs, and CPUs using genetic algorithms to explore the optimization space. Demonstrated reduced memory footprint on realworld models.

- 2018 Google, Software Engineer Intern 2018

Created a representative benchmark generator for Google’s Protocol Buffer usage. Working in the Google Wide Profiling team to synthesise benchmarks for Google compute. The project involved company-wide workload characterization through to datacenter-scale low level performance analysis of profiles and hardware counters.

- 2016-2018 Codeplay Software, Software Engineer (Part Time) 2016-2018

Contributed to Tensorflow and Eigen. Implemented GPU memory management for expression trees. Compile time scheduling and kernel fusion for expression trees on GPUs using future standards for heterogeneous parallelism. Extensive C++ meta-programming. Integrated compiler fuzzers into continuous testing tooling.

- 2012-2013 Intel Corporation, Software Engineer Intern 2012-2013

Patched ioctl subsystem in Linux kernel. Developed tools for Intel GPU assembly programming. Implemented GTK+ support for Wayland display server. Fixed usability bugs in GNOME desktop applications. Developed 3D particle effects engine. Numerous open source project contributions.

- 2010-2017 Freelance, Web developer 2010-2017

Full-stack development for small businesses, including graphic design and branding. Clients have included musicians, beauty rooms, and conferences. Frontend experience with JavaScript; backend development using Clojure, Node.js, PHP, MySQL, PostgreSQL, and Jekyll. Experience with Bootstrap framework and WordPress CMS.

- 2008 Rolls Royce Holdings plc, Summer Placement 2008

Summer week placement in the Design Methods Group.

Education expand all

- 2020 PhD Informatics, University of Edinburgh 2020

Thesis Deep Learning for Compilers Advisors Hugh Leather and Pavlos Petoumenos Deep learning over programs. Developed novel machine learning methods for random program generation, compiler optimisations, and representative benchmarking. Applications for heterogeneous parallelism, compiler testing, and adaptive performance tuning. Awarded 3 grants, 3 best papers, 9 invited talks, and 7 posters.

- 2015 MSc by Research, University of Edinburgh 2015

Grade Distinction (Thesis: 85%) Thesis Autotuning Stencils Codes with Algorithmic Skeletons Advisors Hugh Leather and Pavlos Petoumenos Runtime adaptive tuning for heterogeneous parallel systems, targeting a high level DSL for multi-GPU stencil programs. Demonstrated automatic 1.33× speedup over human-expert tuned programs through machine learning over distributed training sets.

- 2014 MEng Electronic Engineering and Computer Science, Aston Univeristy 2014

Grade First Class Honours (Thesis: 90%) Thesis Protein Isoelectric Point Database Advisors Ian Nabney Created a search engine and API for a novel molecular biochemistry dataset with ~1k monthly active users. Targeting bioinformatics research and released open source.

Awards

- 2022 Best Paper Nominee, MLArchSys 2022

- 2022 Distinguished Paper Award, CGO. 29% acceptance rate 2022

- 2020 SICSA PhD Award for Best Dissertation in Scotland 2019-2020. 2020

- 2019 HiPEAC Travel Grant 2019

- 2018 Distinguished Paper Award, ISSTA. 112 submissions, 28% acceptance rate 2018

- 2017 Best Paper Award, PACT. 109 submissions, 23% acceptance rate 2017

- 2017 Best Paper Award, CGO. 116 submissions, 22% acceptance rate 2017

- 2015 PhD studentship, EPSRC grant EP/L01503X/1 2015

- 2014 IET Institute of Engineering & Technology Prize 2014

- 2009 Arkwright Scholarship, Rolls Royce Plc 2009

- 2009 EES Engineering Education Scheme of England 2009

- 2008 AESSEAL Design Innovation Award 2008

Publications expand all

- 2022 BenchPress: A Deep Active Benchmark Generator (PACT) 2022

Authors F. Tsimpourlas, P. Petoumenos, M. Xu, C. Cummins, K. Hazelwood, A. Rajan, H. Leather Publication PACT BenchPress is a directed program synthesizer for compiler benchmarks. Using active learning, it ranks compiler features based on their significance and produces executables that target them.

- 2022 Q-gym: An Equality Saturation Framework for DNN Inference Exploiting Weight Repetition (PACT) 2022

Authors C. Fu, H. Huang, B. Wasti, C. Cummins, R. Baghdadi, K. Hazelwood, Y. Tian, J. Zhao, H. Leather Publication PACT The high computation cost is one of the key bottlenecks for adopting deep neural networks (DNNs) in different hardware. Q-gym consists of a compiler which leverages equality saturation to generate computation expressions, and various parallelization methods to accelerate DNN inference on different hardware.

- 2022 Autophase V2: Towards Function Level Phase Ordering Optimization (MLArchSys Best Paper Nominee) 2022

Authors M. Almakki, A. Izzeldin, Q. Huang, A. Haj Ali, C. Cummins Publication MLArchSys Phase ordering offers improved program performance by specializing compiler optimizations to invidual programs. In this work we propose further specializing the phase ordering for each function. Results show up to 9% improvement.

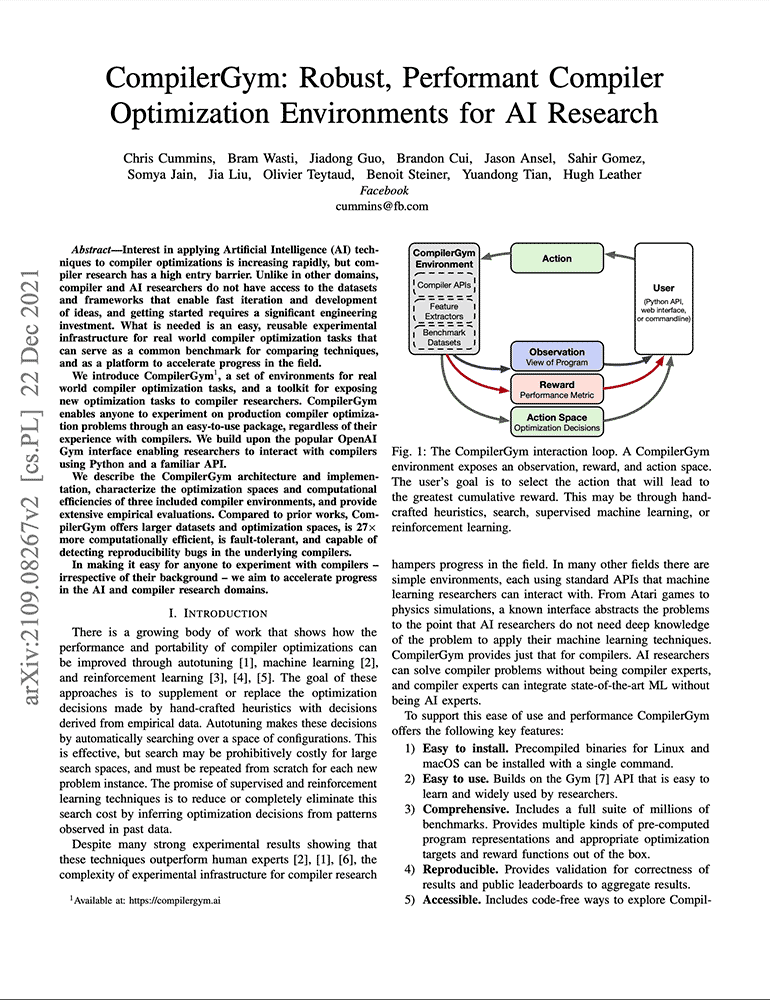

- 2022 CompilerGym: Robust, Performant Compiler Optimization Environments for AI Research (CGO Distinguished Paper) 2022

Authors C. Cummins, B. Wasti, J. Guo, B. Cui, J. Ansel, S. Gomez, S. Jain, J. Liu, O. Teytaud, B. Steiner, Y. Tian, H. Leather Publication CGO We aim to lower the barrier-to-entry to compiler optimization research. We present CompilerGym, a suite of tools that removes the significant engineering investment required try out new ideas on production compiler problems.

- 2022 Profile Guided Optimization without Profiles: A Machine Learning Approach 2022

Authors N. Rotem, C. Cummins Publication arXiv Profile guided optimization is an effective technique for improving the optimization ability of compilers based on dynamic behavior, but collecting profile data is expensive, cumbersome, and requires regular updating to remain fresh. We present a novel statistical approach to inferring branch probabilities that improves the performance of programs that are compiled without profile guided optimizations.

- 2021 Caviar: An E-graph Based TRS for Automatic Code Optimization 2021

Authors S. Kourta, A. Namani, F. Tayeb, K. Hazelwood, C. Cummins, H. Leather, R. Baghdadi Publication arXiv Accelerating e-graph construction is crucial for making the use of e-graphs practical in compilers. In this paper, we present Caviar, an e-graph-based TRS for proving expressions within compilers. Caviar is a fast (20x faster than base e-graph TRS) and flexible TRS.

- 2021 Learning Space Paritions for Path Planning (NeurIPS) 2021

Authors K. Yang, T. Zhang, C. Cummins, B. Cui, B. Steiner, L. Wang, J. Gonzalez, D. Klein, Y. Tian Publication NeurIPS Path planning, the problem of efficiently discovering high-reward trajectories, often requires optimizing a high-dimensional and multimodal reward function. We develop a novel formal regret analysis for when and why an adaptive region partitioning scheme works. We also propose a new path planning method which improves the function value estimation within each sub-region, and uses a latent representation of the search space.

- 2021 ProGraML: A Graph-based Program Representation for Data Flow Analysis and Compiler Optimizations (ICML) 2021

Authors C. Cummins, Z. Fisches, T. Ben-Nun, T. Hoefler, M. O’Boyle, H. Leather Publication ICML Most machine learning methods cannot replicate even the simplest of the abstract interpretations of data flow analysis that are critical to making good optimization decisions. To benchmark current and future learning techniques for compiler analyses we introduce an open dataset of 461k Intermediate Representation files for LLVM, covering five source programming languages, and 15.4M corresponding data flow results. We formulate data flow analysis as an MPNN and show that standard analyses can be learned, yielding improved performance on downstream compiler optimization tasks.

- 2021 Value Learning for Throughput Optimization of Deep Neural Networks (MLSys) 2021

Authors B. Steiner, C. Cummins, H. He, H. Leather Publication MLSys Scheduling deep learning workloads by predicting the expected performance of a partial schedule using an LSTM and carefully engineered features. Achieves 2.6x speedup over Halide and 1.5x speedup over TVM in orders of magnitude less search time.

- 2020 Deep Data Flow Analysis 2020

Authors C. Cummins, Z. Fisches, T. Ben-Nun, T. Hoefler, H. Leather, M. O’Boyle Publication arXiv Most machine learning methods cannot replicate even the simplest of the abstract interpretations of data flow analysis that are critical to making good optimization decisions. To benchmark current and future learning techniques for compiler analyses we introduce an open dataset of 461k Intermediate Representation files for LLVM, covering five source programming languages, and 15.4M corresponding data flow results. We formulate data flow analysis as an MPNN and show that standard analyses can be learned, yielding improved performance on downstream compiler optimization tasks.

- 2020 Program Graphs for Machine Learning (NeurIPS) 2020

Authors C. Cummins, Z. Fisches, T. Ben-Nun, T. Hoefler, H. Leather, M. O’Boyle Publication ML for Systems Workshop, NeurIPS We present ProGraML - Program Graphs for Machine Learning - a language-independent, portable representation of program semantics that enables analysis through deep learning. We show that standard analyses can be learned, significantly outperforming state-of-the-art approaches.

- 2020 Value Function Based Performance Optimization of Deep Learning Workloads (NeurIPS) 2020

Authors B. Steiner, C. Cummins, H. He, H. Leather Publication ML for Systems Workshop, NeurIPS Scheduling deep learning workloads by predicting the expected performance of a partial schedule using an LSTM and carefully engineered features. Achieves 2.6x speedup over Halide and 1.5x speedup over TVM in orders of magnitude less search time.

- 2020 Machine Learning in Compilers: Past, Present and Future (FDL) 2020

Authors H. Leather, C. Cummins Publication FDL, Kiel, Germany This paper provides a retrospective of machine learning in compiler optimisation from its earliest inception, through some of the works that set themselves apart, to today’s deep learning, finishing with our vision of the field’s future.

- 2020 ProGraML: Graph-based Deep Learning for Program Optimization and Analysis 2020

Authors C. Cummins, Z. V. Fisches, T. Ben-Nun, T. Hoefler, and H. Leather Publication arXiv Novel graph-based representation for machine learning over programs. We capture whole-program control, data, and call flow at the IR-level, equipping machine learning models to replicate the types of compiler analyses critical to optimization. We set new state-of-the-art performance in two downstream tasks - heterogenous device mapping and algorithm classification.

- 2020 Deep Learning in Compilers (thesis) 2020

Authors C. Cummins Publication PhD Thesis, University of Edinburgh Deep learning over programs. Developed novel machine learning methods for random program generation, compiler optimisations, and representative benchmarking. Applications for heterogeneous parallelism, compiler testing, and adaptive performance tuning.

- 2019 A Case Study on Machine Learning for Synthesizing Benchmarks (MAPL) 2019

- 2018 Compiler Fuzzing through Deep Learning (ISSTA Distinguished Paper) 2018

Authors C. Cummins, P. Petoumenos, A. Murray, and H. Leather Publication ISSTA (28% acceptance rate), Amsterdam, Netherlands Unsupervised machine learning to derive program generators for compiler fuzz testing. Implemented in 100x less code than state-of-the-art program generator, and 3.03x faster. Discovered 67 new bugs in OpenCL compilers, many of which are now fixed.

- 2018 DeepSmith: Compiler Fuzzing through Deep Learning (ACACES) 2018

- 2017 End-to-end Deep Learning of Optimization Heuristics (PACT Best Paper) 2017

Authors C. Cummins, P. Petoumenos, Z. Wang, and H. Leather Publication PACT (23% acceptance rate), Portland, Oregon Learning optimization heuristics directly from raw source code, without the need for feature extraction. Exceeds performance of state-of-the art predictive models using hand crafted features, and can transfer knowledge gained from one optimization task to another, even if the learned tasks are dissimilar.

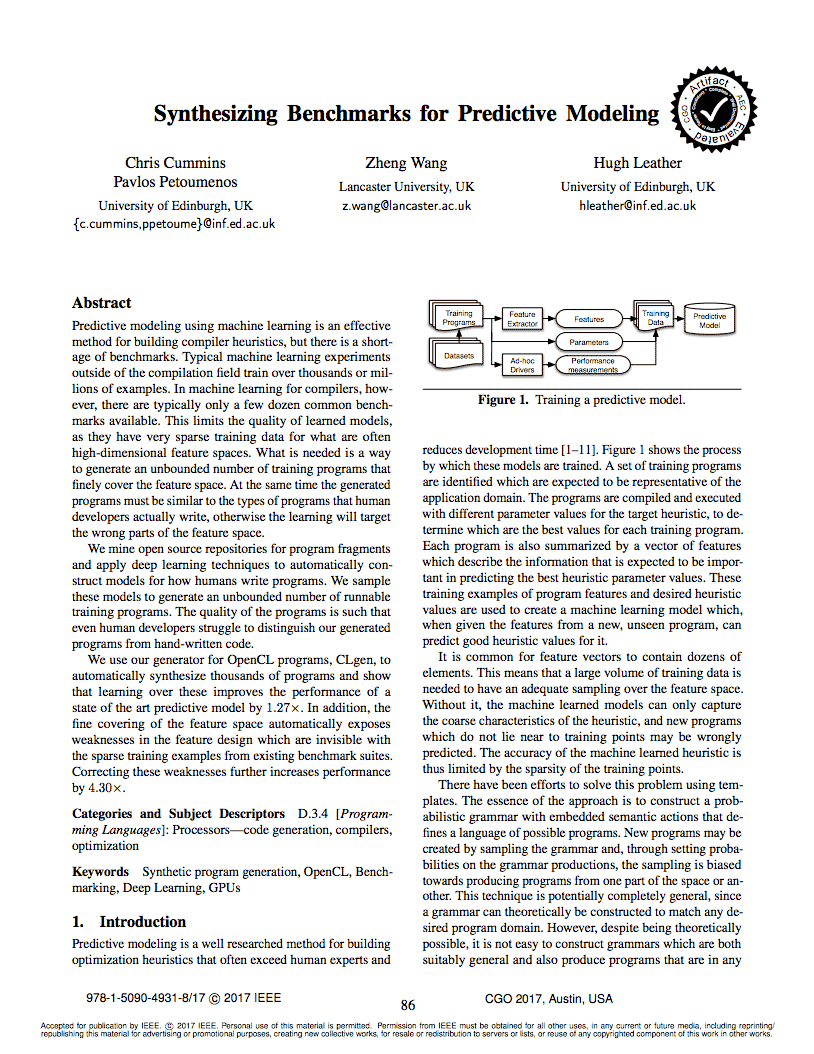

- 2017 Synthesizing Benchmarks for Predictive Modeling (CGO Best Paper) 2017

Authors C. Cummins, P. Petoumenos, Z. Wang, and H. Leather Publication CGO (22% acceptance rate), Austin, Texas Deep learning over massive codebases from GitHub to generate benchmark programs. Automatically synthesizes OpenCL kernels which are indistinguishable from hand-written code, and improves state-of-the-art predictive model performance by 4.30×.

- 2016 Autotuning OpenCL Workgroup Sizes (ACACES) 2016

Authors C. Cummins, P. Petoumenos, M. Steuwer, and H. Leather Publication ACACES (extended abstract), Fiuggi, Italy Machine learning-enabled autotuning of multi-GPU OpenCL workgroup sizes. Static tuning achieves only 26% of the maximum performance, my approach achieves 92%.

- 2016 Towards Collaborative Performance Tuning of Algorithmic Skeletons (HLPGPU) 2016

Authors C. Cummins, P. Petoumenos, M. Steuwer, and H. Leather Publication HLPGPU, HiPEAC, Prague A distributed framework for dynamic prediction of optimisation parameters using machine learning. Automatically exceeds human experts by 1.22x.

- 2016 Autotuning OpenCL Workgroup Size for Stencil Patterns (ADAPT) 2016

Authors C. Cummins, P. Petoumenos, M. Steuwer, and H. Leather Publication ADAPT, HiPEAC, Prague Three methodologies to autotune stencil patterns using machine learning. Speedups of 3.79× over the best possible static size, 94% of the maximum performance.

- 2015 PIP-DB: The Protein Isoelectric Point Database (Bioinformatics) 2015

Authors E. Bunkute, C. Cummins, F. Crofts, G. Bunce, I. T. Nabney, and D. R. Flower Publication Bioinformatics, 31(2), 295-296 An open source search engine of protein isoelectric points. Provides public access to bioinformatics data from the literature for comparison and benchmarking purposes.

Invited Talks expand all

- 2020 ProGraML: Graph-based Deep Learning for Program Optimization and Analysis (PACT) 2020

- 2018 Compiler Fuzzing through Deep Learning (Codeplay Software) 2018

- 2018 Machine Learning for Compilers (ISAGT) 2018

Duration 30 minutes A brief overview of some of ways machine learning has been used to help both compiler developers and users.

- 2018 Using Deep Learning to Generate Human-like Code (Facebook, Menlo Park) 2018

Duration 60 minutes An overview of my research on deep learning for synthetic program generation, and the challenges of semantic programming language modeling using neural networks.

- 2018 End-to-end Deep Learning of Compiler Hueristics (Google, Mountain View) 2018

Duration 30 minutes My slide deck for PACT’17.

- 2018 End-to-end Deep Learning of Compiler Hueristics (Google, Sunnyvale) 2018

Duration 60 minutes My slide deck for PACT’17.

- 2017 Using Deep Learning to Generate Human-like Code (SPLS) 2017

Duration 30 minutes An overview of my research on deep learning for synthetic program generation, and the challenges of semantic programming language modeling using neural networks.

- 2016 Machine Learning & Compilers (Codeplay Software) 2016

- 2016 Building an AI that Codes (Ocado Technology) 2016

- 2016 All the OpenCL on GitHub: Teaching an AI to code (Amazon Development Center) 2016

Duration 15 minutes First presentation of using deep learning to model OpenCL programming language semantics from mined open source code.

Other Academic Activities

| Committees | MLArchSys'22 Organizing Commitee (2022), COSMIC'19 General Co-chair (2019), PACT'18 HotCRP Chair (2018), and CGO'18 Web Chair (2018). |

| Peer Reviews | PLDI (2022), MLSys (2022), CC (2022), ACM TACO (2020), CGO (2018), ACM TACO (2016), LCTES (2016), and CGO (2016). |

| Posters | ISSTA (2018), ACACES (2018), PPar (2017), Google (2016), PPar (2016), ACACES (2016), PLDI (2016), HiPEAC (2016), Google (2015), and PPar (2015). |

Key Technical Skills

| Python | |

| C/C++ | |

| Git | |

| GNU / Linux | |

| Bash | |

| Jupyter | |

| Bazel | |

| SQL | |

| TensorFlow | |

| PyTorch | |

| HTML+CSS+JS | |

| Java | |

| OpenCL | |